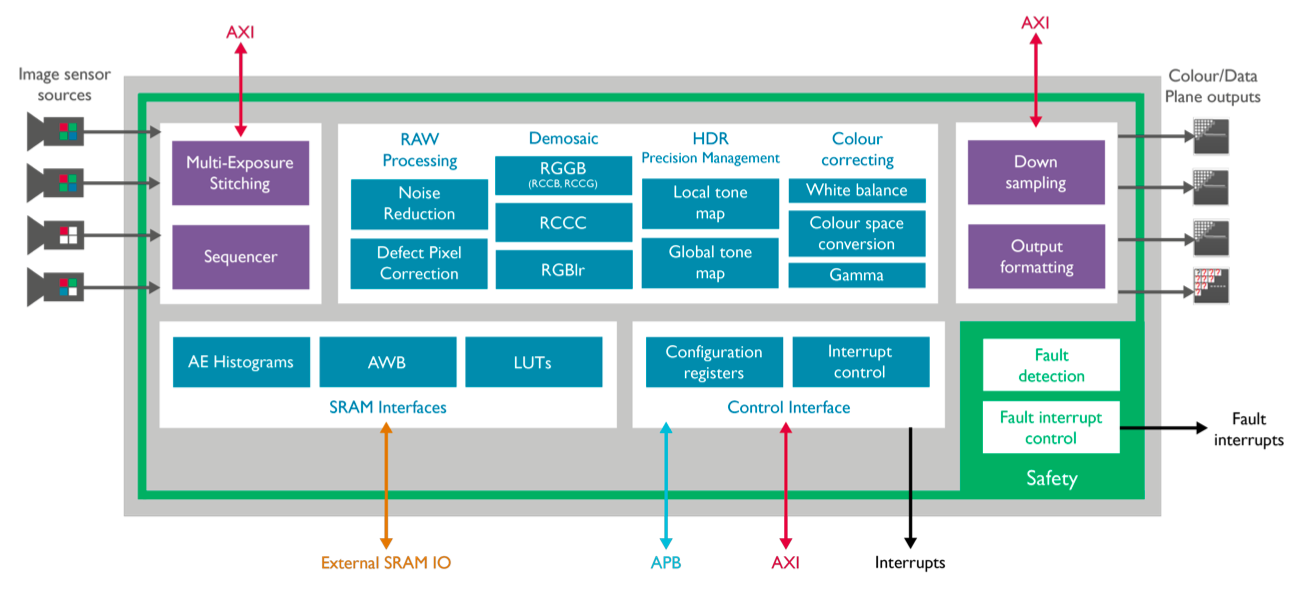

ARM's latest image signal processor (ISP), the Mali-C71, marks the first fruit of the company's year-ago acquisition of Apical. Tailored to optimize images not only for human viewing but also for computer vision algorithms, the Mali-C71 provides expanded capabilities such as wide dynamic range and multi-output support (Figure 1). And, in a nod to the ADAS (advanced driver assistance systems) and autonomous vehicle applications that the company believes are among its near-term high-volume opportunities, the Mali-C71 is also compliant with the ASIL D and SIL 3 safety integrity levels, and implements numerous reliability enhancements.

Figure 1. ARM's new Mali-C71 ISP supports multiple inputs and outputs, as well as additional capabilities valued by ADAS and other computer vision applications.

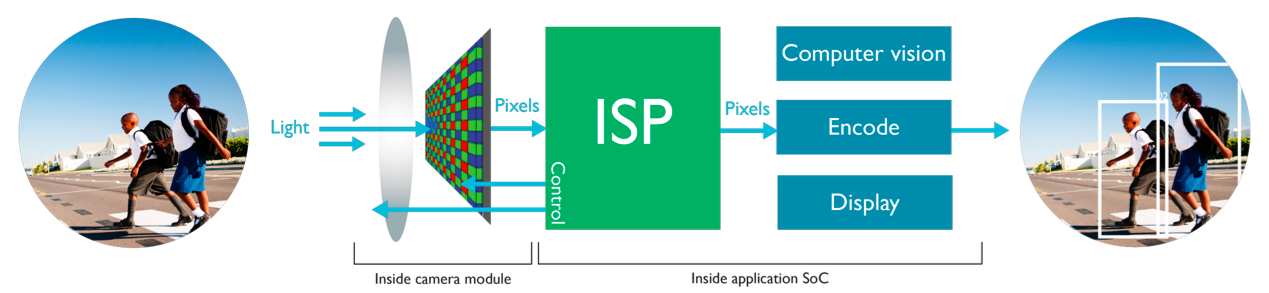

As a recent technical article published by the Embedded Vision Alliance notes, optimum source image quality is essential to enabling robust computer vision results (Figure 2).

Figure 2. The ISP is an important link in the complete image processing and analysis "chain".

The article, "Image Quality Analysis, Enhancement and Optimization Techniques for Computer Vision," also points out, however, that such optimizations can often be at odds with conventional image tuning intended for human viewing purposes:

The bulk of historical industry attention on still and video image capture and processing has focused on hardware and software techniques that improve images' perceived quality as evaluated by the human visual system, thereby also mimicking as closely as possible the human visual system. However, while some of the methods implemented in the conventional image capture-and-processing arena may be equally beneficial for computer vision processing purposes, others may be ineffective, thereby wasting available processing resources, power consumption, etc.

Some conventional enhancement methods may, in fact, be counterproductive for computer vision. Consider, for example, an edge-smoothing or facial blemish-suppressing algorithm: while the human eye might prefer the result, it may hamper the efforts of a vision processor that's searching for objects in a scene or doing various facial analysis tasks. In contrast, various image optimization techniques for computer vision might generate outputs that the human eye and brain would judge as "ugly" but a vision processor would conversely perceive as "improved."
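
The edge-smoothing point can be illustrated with a minimal sketch. It assumes a one-dimensional row of pixels and a 3-tap box filter, both chosen purely for illustration; the function names are hypothetical and nothing here comes from the quoted article or from ARM. Smoothing a hard edge cuts the largest pixel-to-pixel step, which is the very signal a gradient-based edge detector looks for.

```python
from typing import List


def box_blur3(signal: List[int]) -> List[int]:
    """3-tap box filter with edge replication (illustrative smoothing only)."""
    n = len(signal)
    out = []
    for i in range(n):
        left = signal[max(i - 1, 0)]
        right = signal[min(i + 1, n - 1)]
        out.append((left + signal[i] + right) // 3)
    return out


def max_gradient(signal: List[int]) -> int:
    """Largest absolute difference between neighboring samples."""
    return max(abs(b - a) for a, b in zip(signal, signal[1:]))


# A hard dark-to-bright edge, as a detector would like to see it.
edge = [0, 0, 0, 255, 255, 255]
smoothed = box_blur3(edge)

print(max_gradient(edge))      # step height before smoothing
print(max_gradient(smoothed))  # step height after smoothing
```

In this toy case the blurred row looks gentler to the eye, but the strongest step drops to a third of its original height, leaving a threshold-based detector with less margin.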

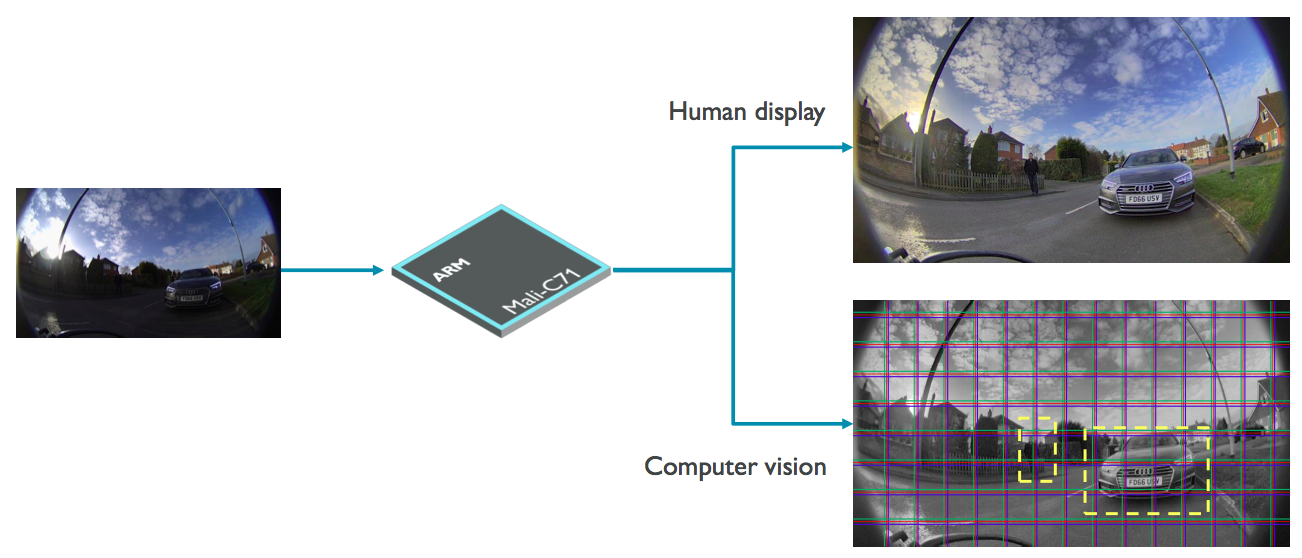

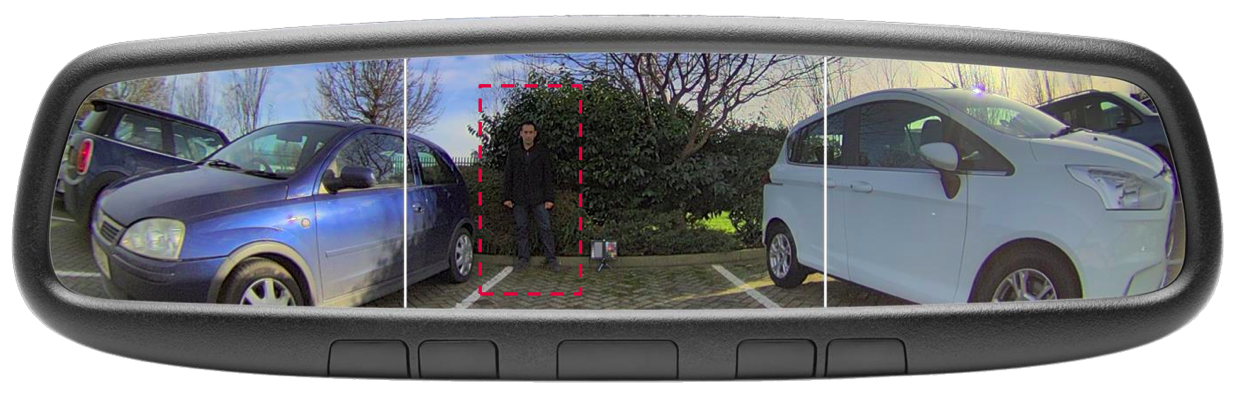

Reflecting this dichotomy, the Mali-C71 supports multiple parallel outputs. One output might be used for viewing on a display, for example, while a second is used for video encode for transmission and archive, and a third for computer vision processing (Figure 3). For the computer vision output, relatively simple image transformations, such as color space alterations and color-to-monochrome conversions, can be done in a single 1.2 Gpixels/sec processing pipeline "pass" along with other image processing operations. More elaborate computer vision-oriented functions such as edge enhancement require an additional pipeline iteration.
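
As a rough software analogy for the single-pass, multi-output idea, the sketch below walks a frame once and emits both a color output for display and a monochrome output for computer vision. The function names are hypothetical, and the BT.601 luma weights are an assumption chosen for illustration; the article does not say which conversions the Mali-C71 actually applies.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # 8-bit (R, G, B)


def to_luma(pixel: Pixel) -> int:
    """Color-to-monochrome conversion using BT.601 luma weights (an assumption)."""
    r, g, b = pixel
    return round(0.299 * r + 0.587 * g + 0.114 * b)


def single_pass(frame: List[Pixel]) -> Tuple[List[Pixel], List[int]]:
    """Visit each pixel once and feed two outputs in parallel."""
    display_out: List[Pixel] = []
    vision_out: List[int] = []
    for pixel in frame:
        display_out.append(pixel)          # color output for human viewing
        vision_out.append(to_luma(pixel))  # monochrome output for computer vision
    return display_out, vision_out


frame = [(255, 255, 255), (0, 0, 0), (255, 0, 0)]
display, vision = single_pass(frame)
print(display)
print(vision)
```

A per-pixel conversion such as this fits in the same traversal as the display output, whereas a neighborhood operation such as edge enhancement needs surrounding pixels, which is consistent with it costing an extra pipeline iteration.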

Figure 3. ARM's Mali-C71 supports not only conventional image enhancement for human viewing but also a parallel output intended for computer vision analysis.

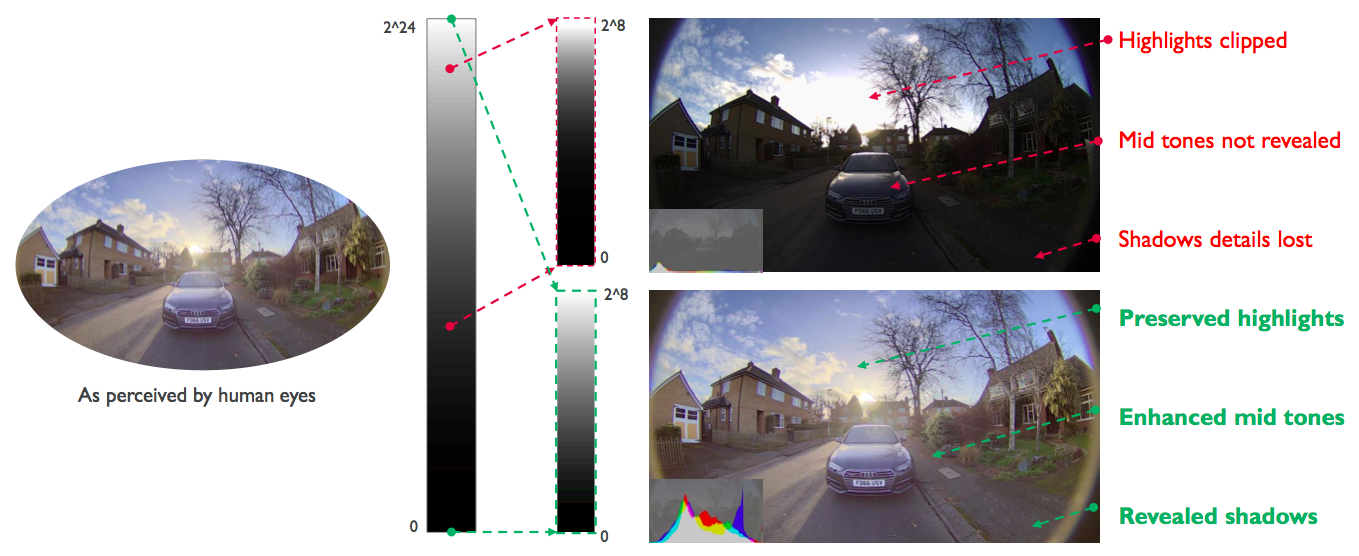

The Mali-C71 also supports multiple image inputs: up to four image sensors streaming real-time information, along with up to sixteen additional non-real-time streams routed over AXI and transferred through external memory from additional Mali-C71 ISPs or other processor cores on the same SoC. One possible use of these multiple inputs is to generate HDR (high dynamic range) images from cameras configured at different exposure settings, along with sensors implementing different color filter arrays: Bayer patterns for full-color spectrum capture, for example, plus white-dominant arrays to capture additional (albeit monochrome) detail. ARM claims that via such multi-sensor combination techniques, Mali-C71 is capable of capturing up to 24 stops of dynamic range, translating to more than 16 million distinct intensity levels (Figure 4). In comparison, the company claims that even the best modern DSLRs (digital single-lens reflex cameras) are only capable of capturing 14 stops of dynamic range.
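
The arithmetic behind these figures is straightforward, since each stop is a doubling of light. The short sketch below (helper name hypothetical) converts stops to distinct linear intensity levels and compares the two claims.

```python
def levels_from_stops(stops: int) -> int:
    """Each stop doubles the intensity range, so N stops span 2**N levels."""
    return 2 ** stops


mali_c71_levels = levels_from_stops(24)  # ARM's claim for the Mali-C71
dslr_levels = levels_from_stops(14)      # ARM's figure for the best DSLRs

print(mali_c71_levels)                 # 16777216, "more than 16 million"
print(dslr_levels)                     # 16384
print(mali_c71_levels // dslr_levels)  # 1024, a 10-stop difference
```

The ten-stop gap therefore corresponds to a linear range roughly a thousand times wider.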

Figure 4. By merging the outputs of multiple image sensors, along with other camera streams, the Mali-C71 delivers greatly expanded dynamic range results (top), revealing important image details (middle) that a traditional approach might obscure (bottom).
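
One common way to merge differently exposed captures is sketched below. It is a generic two-exposure technique offered only as an illustration, not a description of ARM's method. The function name, the 12-bit sensor code range and the 16x exposure ratio are all assumptions. The long exposure is used wherever it has not saturated, and the scaled short exposure fills in the highlights.

```python
from typing import List


def merge_exposures(short: List[int], long: List[int],
                    ratio: int, max_code: int = 4095) -> List[int]:
    """Merge two exposures of one scene into a single linear HDR signal.

    `long` was exposed `ratio` times longer than `short`; `max_code` is the
    sensor's saturation code (4095 assumes a 12-bit sensor).
    """
    if len(short) != len(long):
        raise ValueError("exposures must have the same number of pixels")
    merged = []
    for s, l in zip(short, long):
        if l < max_code:
            merged.append(l)          # shadows and midtones: cleaner long exposure
        else:
            merged.append(s * ratio)  # highlights: long exposure clipped, rescale short
    return merged


short = [1, 100, 4000]
long = [16, 1600, 4095]  # last pixel is saturated in the long exposure
print(merge_exposures(short, long, ratio=16))
```

With a 16x ratio the merged signal can reach 4095 x 16, so a 12-bit sensor yields about 16 bits of range; each doubling of the exposure ratio adds roughly one stop.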

In contrast to ARM's (formerly Apical's) ISPs tailored for DSLRs, camera phones and other cost-focused consumer applications, the Mali-C71 includes additional reliability-tailored features required for more demanding applications such as ADAS and autonomous vehicles. Targeting potential systematic faults, Mali-C71 supports SIL 3 under the IEC 61508 standard, along with ASIL D under ISO 26262. And to address potential random faults, Mali-C71 provides a range of capabilities:

- More than 300 dedicated fault detection circuits, covering both the ISP and connected image sensors

- Built-in continuous self-test for the ISP, along with a fault interrupt controller

- Image plane tagging, to explicitly identify each pixel that the ISP has modified, and

- CRCs (cyclic redundancy checks) of all data paths, including both integrated and external memories as well as ISP configuration blocks
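
Two of these mechanisms, CRC protection and image plane tagging, can be sketched in software. The sketch rests on loud assumptions: the CRC-32 from Python's `zlib` stands in for whatever polynomial the hardware uses, the helper names are hypothetical, and a simple clamp stands in for an ISP operation that modifies pixels.

```python
import zlib
from typing import List, Tuple


def crc_of(block: bytes) -> int:
    """Checksum stored alongside a data or configuration block (CRC-32 assumed)."""
    return zlib.crc32(block)


def is_intact(block: bytes, stored_crc: int) -> bool:
    """Recompute the CRC on read; a mismatch signals corruption."""
    return zlib.crc32(block) == stored_crc


def clamp_with_tags(pixels: List[int], max_code: int) -> Tuple[List[int], List[bool]]:
    """Apply a stand-in pixel operation and tag every pixel it changed."""
    out = [min(p, max_code) for p in pixels]
    tags = [o != p for o, p in zip(out, pixels)]
    return out, tags


# A single flipped bit in a configuration block is caught by the CRC.
config = bytes([0x12, 0x34, 0x56, 0x78])
stored = crc_of(config)
corrupted = bytes([config[0] ^ 0x01]) + config[1:]
print(is_intact(config, stored))     # True
print(is_intact(corrupted, stored))  # False

# The tag plane marks which pixels the processing step altered.
print(clamp_with_tags([10, 300, 255], max_code=255))
```

A downstream vision algorithm could use such a tag plane to discount pixels that the ISP altered, while the CRC check lets the system raise a fault rather than act on corrupted data.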

Note that multi-image stitching (for surround viewing of the entire area around a vehicle, for example) is not supported in the Mali-C71; such a function would be implemented elsewhere in the SoC or broader system. Similarly, vehicle, pedestrian and other object identification and tracking functions via traditional computer vision or deep neural networks are the bailiwick of another computer vision processor core, such as ARM’s special-purpose Spirit (formerly Assertive Engine) block. Mali-C71 is now available for evaluation and licensing; initial licensees have begun integrating the core into their chip designs, according to company officials.
